Resources

LLM Attributes Every CISO Should Track Today

By Sarang Warudkar - Sr. Technical PMM (CASB & AI)

February 18, 2026 5 Minute Read

AI tools now influence daily work across writing, analysis, meeting automation and software development. This rapid adoption delivers strong productivity gains, yet it introduces a new security surface driven by the behaviour of large language models (LLMs). Traditional SaaS checks are no longer enough. CISOs now evaluate the application and the model behind it with equal care.

This blog outlines the LLM attributes that shape enterprise risk and the practical steps security leaders can use to govern them.

Why LLM Attributes Matter

Most AI SaaS products embed third party LLMs. This creates a dual dependency. The app may pass every security check, but the model underneath can still expose data, produce unsafe code or leak sensitive workflows. A clear view of the LLM layer closes this gap and brings AI governance in line with modern threats.

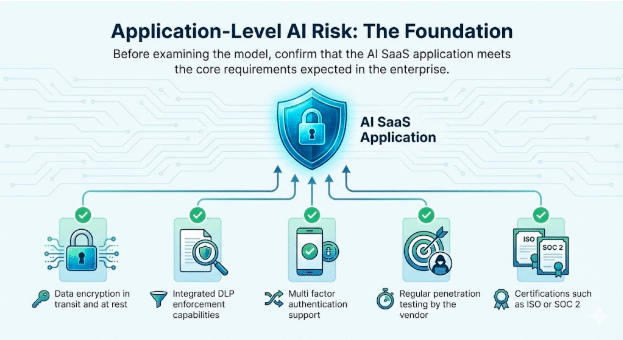

1. Application-Level AI Risk: The Foundation

Before examining the model, confirm that the AI SaaS application meets the core requirements expected in the enterprise.

Baseline attributes to verify

- Data encryption in transit and at rest

- Integrated DLP enforcement capabilities

- Multi factor authentication support

- Regular penetration testing by the vendor

- Certifications such as ISO or SOC 2

These checks remain essential. They establish trust in the service itself and create the foundation for a safe AI rollout.

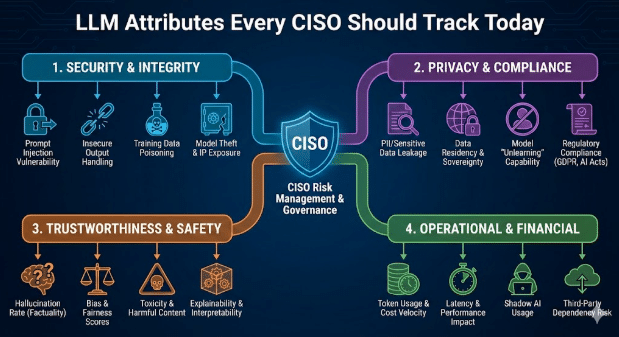

2. LLM Behavior: The Source of the New Risk Surface

LLM attributes directly influence how the application responds to real users and adversarial prompts. CISOs now track the following behaviours across every embedded model.

GenAI and LLM Integration

Identify the exact model, hosting region, update pattern and whether the vendor fine tunes it using customer data.

Jailbreak Exposure

Jailbreaks occur when users manipulate prompts to bypass LLM safety controls. Common techniques include role playing, layered instructions, fictional framing, and indirect or encoded language. Because these interactions resemble normal usage, they often evade basic controls. Tracking jailbreak attempts helps security teams detect misuse early, strengthen guardrails, and demonstrate proactive governance during audits.

Example

- An attacker convinces a chatbot to explain how to disable MFA on a customer account. This opens a direct path to sensitive data and breaks identity governance inside a workflow that appears legitimate.

Malware and Harmful Code Generation

LLMs sometimes produce code that embeds hidden execution paths or exfiltration routines.

Example

- An engineer pastes AI-suggested code into an internal service. The code contains a covert data leak. This triggers an undetected breach because the logic appears valid.

Toxicity, Bias and CBRN Output Risks

Models can generate unsafe text when presented with sensitive or adversarial prompts. Track these behaviors for business units where safety, compliance or physical risk is in scope.

Examples

- Toxicity: An internal support chatbot responds to an escalated customer complaint with abusive or threatening language after being fed hostile prompts, creating HR and brand risk when the transcript is logged or shared.

- Bias: A hiring team uses an LLM to rank candidates, and the model consistently downgrades profiles containing gender or caste indicators, exposing the organization to discrimination and audit findings.

- CBRN Output Risks: An R and D user asks an LLM about improving a chemical process, and the model returns step by step guidance that aligns with restricted chemical synthesis, creating regulatory and physical safety exposure.

Alignment with NIST and OWASP LLM Guidance

NIST has released a Generative AI Profile that is quickly becoming the baseline for responsible AI risk management. Security and compliance teams now expect every LLM based application to map clearly to these expectations.

Enterprises are asking direct questions

- Who is accountable for how the AI is used and for its outcomes

- Which LLM use cases are explicitly allowed or prohibited

- Whether training and fine tuning data is transparently declared

- How risks such as prompt injection, hallucinations, and unsanctioned data access are handled

When these questions lack clear answers, audit exposure rises sharply. In regulated sectors such as healthcare and financial services, misalignment with NIST guidance increases the likelihood of data leakage, regulatory penalties, and failed audits.

In parallel, the OWASP Top 10 for LLM Applications documents a new class of vulnerabilities that extend beyond traditional application security concerns. These include prompt injection, insecure output handling, training data poisoning, and unsafe integrations.

Real incidents already reflect these risks. In one case, a SaaS application allowed users to upload documents for AI processing. An attacker embedded hidden instructions inside a file, the model executed them, and sensitive company data was exposed. These scenarios are occurring in live production environments, and organizations that do not actively track and mitigate them face material security risk.

Example

- A model without output validation leaks admin keys during a prompt injection attempt. This causes system-wide compromise despite strong app-level controls.

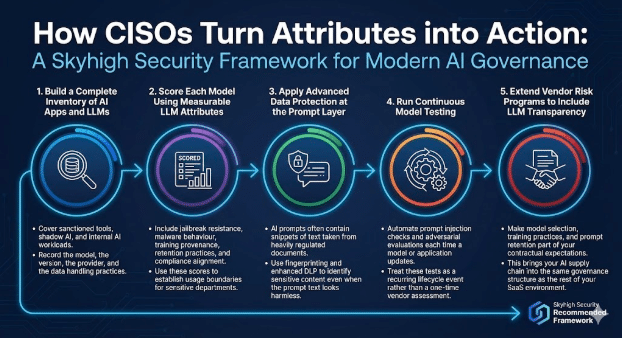

How CISOs Turn These Attributes into Action

A modern AI governance program blends application checks with model-aware controls.

Skyhigh Security recommends the following framework.

1. Build a Complete Inventory of AI Apps and LLMs

Cover sanctioned tools, shadow AI and internal AI workloads.

Record the model, the version, the provider and the data handling practices.

2. Score Each Model Using Measurable LLM Attributes

Include jailbreak resistance, malware behaviour, training provenance, retention practices and compliance alignment. Use these scores to establish usage boundaries for sensitive departments.

3. Apply Advanced Data Protection at the Prompt Layer

AI prompts often contain snippets of text taken from heavily regulated documents. Use fingerprinting and enhanced DLP to identify sensitive content even when the prompt text looks harmless.

4. Run Continuous Model Testing

Automate prompt injection checks and adversarial evaluations each time a model or application updates. Treat these tests as a recurring lifecycle event rather than a one-time vendor assessment.

5. Extend Vendor Risk Programs to Include LLM Transparency

Make model selection, training practices and prompt retention part of your contractual expectations. This brings your AI supply chain into the same governance structure as the rest of your SaaS environment.

The Path Ahead

LLMs reshape how enterprises use AI, and they reshape how CISOs evaluate risk.

In traditional application governance, security teams separate two concerns. The application must meet enterprise standards for access control, data protection, logging, and compliance. Independently, the underlying platform must meet reliability, security, and operational expectations.

LLMs introduce the same two layer responsibility, with higher stakes.

- At the application layer, organizations must govern how AI features are exposed to users. This includes who can access the capability, what data can be submitted, how outputs are shared, and how activity is logged for audit and investigation. These controls mirror familiar application security practices, extended into AI driven workflows.

- At the model layer, CISOs must assess how the LLM behaves under real world conditions. This includes how the model handles sensitive prompts, whether it produces unsafe or biased outputs, how it retains or reuses data, and how resilient it is to abuse such as prompt injection or data poisoning. These behaviours sit outside traditional app testing and require explicit governance.

Tracking LLM attributes such as data usage, safety behaviour, guardrail coverage, and failure patterns gives security leaders the same confidence they expect from mature application programs. With this visibility, CISOs can support wider AI adoption while maintaining security, privacy, and regulatory commitments across the enterprise.

About the Author

Sarang Warudkar

Sr. Technical PMM

Sarang Warudkar is a seasoned Product Marketing Manager with over 10+ years in cybersecurity, skilled in aligning technical innovation with market needs. He brings deep expertise in solutions like CASB, DLP, and AI-driven threat detection, driving impactful go-to-market strategies and customer engagement. Sarang holds an MBA from IIM Bangalore and an engineering degree from Pune University, combining technical and strategic insight.

Related Content

Trending Blogs

LLM Attributes Every CISO Should Track Today

Sarang Warudkar February 18, 2026

From DPDPA Requirements to Data Visibility: The DSPM Imperative

Niharika Ray and Sarang Warudkar February 12, 2026

Skyhigh Security Q4 2025: Sharper control, clearer visibility, and faster action across data, web, and cloud

Thyaga Vasudevan January 21, 2026

The Hidden GenAI Risk That Could Cost Your Company Millions (And How to Fix It Today)

Jesse Grindeland December 18, 2025

Skyhigh Security Predictions: 2026 Is the Year AI Forces a New Blueprint for Enterprise Security

Thyaga Vasudevan December 12, 2025