Tokenization vs. Encryption

Tokenization and encryption are often mentioned together as means to secure information when it’s being transmitted on the Internet or stored at rest. In addition to helping to meet your organization’s own data security policies, they can both help satisfy regulatory requirements such as those under PCI DSS, HIPAA-HITECH, GLBA, ITAR, and the EU GDPR. While tokenization and encryption are both effective data obfuscation technologies, they are not the same thing, and they are not interchangeable. Each technology has its own strengths and weaknesses, and based on these, one or the other should be the preferred method to secure data under different circumstances. In some cases, such as with electronic payment data, both encryption and tokenization are used to secure the end-to-end process.

Encryption Definition

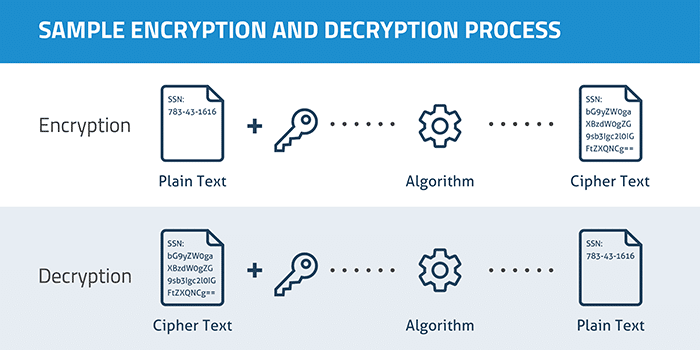

Encryption is the process of using an algorithm to transform plaintext information into a non-readable form called ciphertext. An algorithm and an encryption key are required to decrypt the information and return it to its original plaintext format. Today, SSL/TLS encryption is commonly used to protect information as it’s transmitted on the Internet. Using the built-in encryption capabilities of operating systems or third-party encryption tools, millions of people encrypt data on their computers to protect against the loss of sensitive data if their computer is stolen. Encryption can also be used to thwart government surveillance and theft of sensitive corporate data.

“Encryption works. Properly implemented strong crypto systems are one of the few things that you can rely on.” – Edward Snowden

In symmetric key encryption, a single key is used to both encrypt and decrypt the data. In asymmetric key encryption (also called public-key encryption), two different keys are used for the encryption and decryption processes. The public key can be freely distributed since it is only used to lock the data and never to unlock it. For example, a merchant can use a public key to encrypt payment data before sending a transaction to be authorized by a payment processing company. The payment processing company would need the private key to decrypt the card data and process the payment. Asymmetric key encryption is also used to validate identity on the Internet using SSL certificates.
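
To make the public/private split concrete, here is a minimal sketch using textbook RSA with deliberately tiny numbers. The primes, the exponent, and the function names are illustrative assumptions, and the scheme is insecure as written; a production system would rely on a vetted library with large keys and padding.

```python
# Toy textbook RSA. Illustrative only: the numbers are far too small to be
# secure and no padding is applied.
from math import gcd
from typing import Tuple

Key = Tuple[int, int]          # (modulus, exponent)

p, q = 61, 53                  # secret primes (assumed values for the demo)
n = p * q                      # modulus shared by both keys
phi = (p - 1) * (q - 1)
e = 17                         # public exponent, must be coprime with phi
assert gcd(e, phi) == 1
d = pow(e, -1, phi)            # private exponent (modular inverse, Python 3.8+)

public_key: Key = (n, e)       # can be handed to the merchant
private_key: Key = (n, d)      # stays with the payment processor


def encrypt(message: int, key: Key) -> int:
    """Lock a small integer message with the public key."""
    modulus, exponent = key
    return pow(message, exponent, modulus)


def decrypt(ciphertext: int, key: Key) -> int:
    """Unlock a ciphertext with the private key."""
    modulus, exponent = key
    return pow(ciphertext, exponent, modulus)


message = 65                   # must be smaller than n in this toy scheme
ciphertext = encrypt(message, public_key)
recovered = decrypt(ciphertext, private_key)
```

Anyone holding `public_key` can produce `ciphertext`, but only the holder of `private_key` can turn it back into `message`.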

Regardless of what type of key is utilized, users of encryption typically practice regular key rotation in order to reduce the likelihood of a compromised key being used to decrypt all sensitive data. Rotating keys limits the amount of data that’s encrypted using a single key. In the event that an encryption key is compromised, only data encrypted with that key would be vulnerable.
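
The effect of rotation on exposure can be sketched as follows. The `KeyRing` class, the record layout, and the hash-based keystream are hypothetical stand-ins chosen to keep the example within the standard library; the keystream in particular is a toy and should not replace a vetted cipher such as AES-GCM.

```python
import hashlib
import secrets


def keystream_xor(key: bytes, nonce: bytes, data: bytes) -> bytes:
    """Toy cipher: XOR data with SHA-256(key || nonce || counter) blocks.
    Applying it twice with the same key and nonce restores the input."""
    out = bytearray()
    for offset in range(0, len(data), 32):
        counter = (offset // 32).to_bytes(8, "big")
        block = hashlib.sha256(key + nonce + counter).digest()
        chunk = data[offset:offset + 32]
        out.extend(b ^ k for b, k in zip(chunk, block))
    return bytes(out)


class KeyRing:
    """Hypothetical key ring: keeps every key version, encrypts with the newest."""

    def __init__(self):
        self.keys = {}
        self.current_id = 0
        self.rotate()

    def rotate(self) -> int:
        """Create a new key version and make it the active one."""
        self.current_id += 1
        self.keys[self.current_id] = secrets.token_bytes(32)
        return self.current_id

    def encrypt(self, plaintext: bytes) -> dict:
        """Encrypt with the active key and tag the record with its key ID."""
        nonce = secrets.token_bytes(16)
        key = self.keys[self.current_id]
        return {"key_id": self.current_id, "nonce": nonce,
                "ciphertext": keystream_xor(key, nonce, plaintext)}

    def decrypt(self, record: dict) -> bytes:
        """Look up the key version recorded on the record and decrypt."""
        key = self.keys[record["key_id"]]
        return keystream_xor(key, record["nonce"], record["ciphertext"])


def exposed_records(records, compromised_key_id):
    """Return only the records encrypted under the compromised key version."""
    return [r for r in records if r["key_id"] == compromised_key_id]


ring = KeyRing()
records = [ring.encrypt(b"record-1"), ring.encrypt(b"record-2")]
ring.rotate()                              # later data uses key version 2
records.append(ring.encrypt(b"record-3"))
at_risk = exposed_records(records, 1)      # records written before rotation
```

Because each record carries the ID of the key that encrypted it, a compromise of key version 1 puts only the records in `at_risk` in danger, while the record written after rotation stays protected.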

Until now, one of the drawbacks of encrypting data within applications has been that encryption breaks application functionality such as sorting and searching. Because ciphertext is in a different format from the original data, encryption may also break field validation if an application requires specific formats within fields such as payment card numbers or email addresses. New order-preserving, format-preserving, and searchable encryption schemes are making it easier for organizations to protect their information without sacrificing end user functionality within business-critical applications. However, there is usually a tradeoff between application functionality and the strength of encryption.

Tokenization Definition

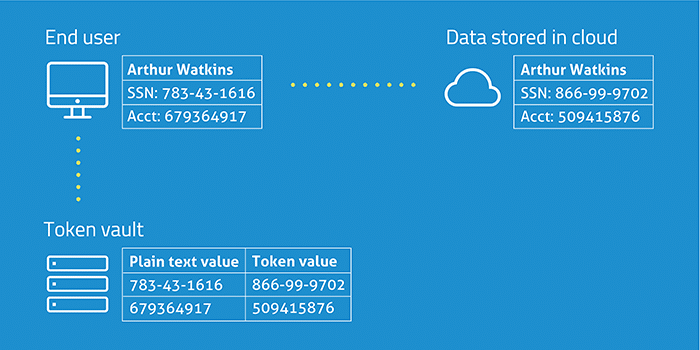

Tokenization is the process of turning a meaningful piece of data, such as an account number, into a random string of characters called a token that has no meaningful value if breached. Tokens serve as a reference to the original data, but cannot be used to guess those values. That’s because, unlike encryption, tokenization does not use a mathematical process to transform the sensitive information into the token. There is no key or algorithm that can be used to derive the original data for a token. Instead, tokenization uses a database, called a token vault, which stores the relationship between the sensitive value and the token. The real data in the vault is then secured, often via encryption.

The token value can be used in various applications as a substitute for the real data. If the real data needs to be retrieved – for example, in the case of processing a recurring credit card payment – the token is submitted to the vault and the index is used to fetch the real value for use in the authorization process. To the end user, this operation is performed seamlessly by the browser or application nearly instantaneously. They’re likely not even aware that the data is stored in the cloud in a different format.
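
The vault lookup described above can be sketched in a few lines. `TokenVault` and its method names are assumptions made for illustration, an in-memory dictionary stands in for the vault database, and the encryption of the stored values mentioned earlier is left out for brevity.

```python
import secrets


class TokenVault:
    """Hypothetical in-memory token vault mapping tokens to real values."""

    def __init__(self):
        self._token_to_value = {}
        self._value_to_token = {}

    def tokenize(self, value: str) -> str:
        """Return the existing token for a value, or issue a new random one."""
        if value in self._value_to_token:
            return self._value_to_token[value]
        token = secrets.token_hex(16)          # random, not derived from value
        while token in self._token_to_value:   # regenerate on the rare collision
            token = secrets.token_hex(16)
        self._token_to_value[token] = value
        self._value_to_token[value] = token
        return token

    def detokenize(self, token: str) -> str:
        """Look up the real value; raises KeyError for an unknown token."""
        return self._token_to_value[token]


vault = TokenVault()
card_number = "4111111111111111"               # well-known test card number
token = vault.tokenize(card_number)            # safe to store in applications
original = vault.detokenize(token)             # only the vault can do this
```

The token comes from a random generator rather than from the card number, so the only route back to the real value is the lookup inside the vault.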

The advantage of tokens is that there is no mathematical relationship to the real data they represent. If they are breached, they have no meaning. No key can reverse them back to the real data values. Consideration can also be given to the design of a token to make it more useful. For example, the last four digits of a payment card number can be preserved in the token so that the tokenized number (or a portion of it) can be printed on the customer’s receipt so she can see a reference to her actual credit card number. The printed characters might be all asterisks plus those last four digits. In this case, the merchant only has a token, not a real card number, for security purposes.
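
A token that keeps the last four digits might be generated along these lines. The function names are hypothetical, and the sketch covers only token generation and receipt masking; the mapping from token to real card number would still live in a vault.

```python
import secrets


def last_four_token(card_number: str) -> str:
    """Replace all but the last four digits with random digits."""
    digits = card_number.replace(" ", "").replace("-", "")
    if not digits.isdigit() or len(digits) < 5:
        raise ValueError("expected a card number with at least five digits")
    random_part = "".join(
        secrets.choice("0123456789") for _ in range(len(digits) - 4)
    )
    return random_part + digits[-4:]


def receipt_mask(token: str) -> str:
    """Render the token for a receipt: asterisks plus the last four digits."""
    return "*" * (len(token) - 4) + token[-4:]


token = last_four_token("4111 1111 1111 1111")   # well-known test card number
printed = receipt_mask(token)
```

Keeping the token the same length and all-numeric lets it pass through fields that expect a card number, while the receipt shows the customer only the digits she already knows.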

Use Cases for Encryption and Tokenization

The most common use case for tokenization is protecting payment card data so that merchants can reduce their obligations under PCI DSS. Encryption can also be used to secure account data, but because the data is still present, albeit in ciphertext format, the organization must ensure the entire technology infrastructure used to store and transmit this data is fully compliant with PCI DSS requirements.

Increasingly, tokens are being used to secure other types of sensitive or personally identifiable information (PII), including Social Security numbers, telephone numbers, email addresses, account numbers and so on. The backend systems of many organizations rely on Social Security numbers, passport numbers, and driver’s license numbers as unique identifiers. Since these unique identifiers are woven into these systems, it’s very difficult to remove them. These identifiers are also used to access information for billing, order status, and customer service. Tokenization is now being used to protect this data to maintain the functionality of backend systems without exposing PII to attackers.

While encryption can be used to secure structured fields such as those containing payment card data and PII, it can also be used to secure unstructured data in the form of long textual passages, such as paragraphs or even entire documents. Encryption is also the ideal way to secure data exchanged with third parties and to protect data and validate identity online, since the other party only needs a small encryption key. SSL (Secure Sockets Layer) and its successor TLS (Transport Layer Security), the foundation of sharing data securely on the Internet today, rely on encryption to create a secure tunnel between the end user and the website. Asymmetric key encryption is also an important component of the SSL certificates used to validate identity.

Encryption and tokenization are both regularly used today to protect data stored in cloud services or applications. Depending on the use case, an organization may use encryption, tokenization, or a combination of both to secure different types of data and meet different regulatory requirements. The Skyhigh Cloud Access Security Broker (CASB), for example, leverages an irreversible one-way process to tokenize user identifying information on premises and obfuscate enterprise identity.
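
One common way to build an irreversible, one-way token is a keyed hash. The sketch below uses HMAC-SHA256 as an assumed technique for illustration; it is not a description of how the Skyhigh product works internally, and the key handling and normalization step are hypothetical.

```python
import hashlib
import hmac
import secrets

# Hypothetical secret that never leaves the organization's premises.
SECRET_KEY = secrets.token_bytes(32)


def one_way_token(user_id: str, key: bytes = SECRET_KEY) -> str:
    """Derive a stable token from a user identifier with a keyed hash.
    There is no decrypt step: the original value cannot be computed
    back from the token."""
    normalized = user_id.strip().lower().encode("utf-8")
    return hmac.new(key, normalized, hashlib.sha256).hexdigest()


alice = one_way_token("alice@example.com")
alice_again = one_way_token(" Alice@Example.com ")
bob = one_way_token("bob@example.com")
```

The same user always maps to the same token, so activity can still be correlated per user, while the token cannot be converted back to the identity it stands for; unlike a vault token, there is no lookup that returns the original value.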

As more data moves to the cloud, encryption and tokenization are being used to secure data stored in cloud services. Most notably, if a government agency subpoenas the data stored in the cloud, the service provider can only turn over encrypted or tokenized information with no way to unlock the real data. The same is true if a cybercriminal gains access to data stored in a cloud service.